When using metrics to make decisions about your code, it’s fundamentally important that those metrics are correct. You need to have absolute faith in the reported numbers. If you can’t, you risk making the wrong decisions based on inaccurate data. By default, there’s a critical flaw in how Xcode measures code coverage for iOS apps. From the moment you set up unit tests for a project, Xcode will automatically identify code as “covered” if it is triggered through the normal application launch sequence, such as your application delegate. This means that your code coverage numbers will be artificially inflated! And broken code coverage in Xcode means you won’t fully understand how well your app is tested.

Let me say this one more time so it sinks in: your iOS code coverage numbers are not correct unless you take specific action to fix them.

An Example Of Broken Code Coverage in Xcode

First, I’m going to demonstrate to you the broken-ness of code coverage in Xcode. Then, I’m going to show you how to fix it.

To observe the broken code coverage, you are going to perform these steps:

1) Create a new empty project, including unit tests
2) Leave the unit tests empty
3) Turn on code coverage
4) Run the tests, and review coverage

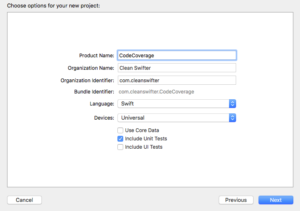

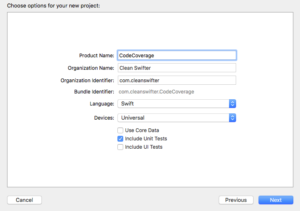

Let’s do it. First, create a new Swift iOS project called CodeCoverage. Be sure to check Include Unit Tests.

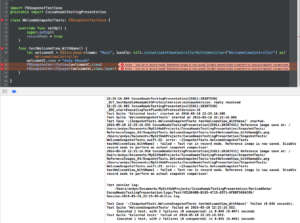

Open CodeCoverageTests.swift. You aren’t going to make any changes to this file, but notice that there are two empty test implementations, testExample() and testPerformanceExample(). These tests will run and pass, but they should generate 0% coverage of the application.

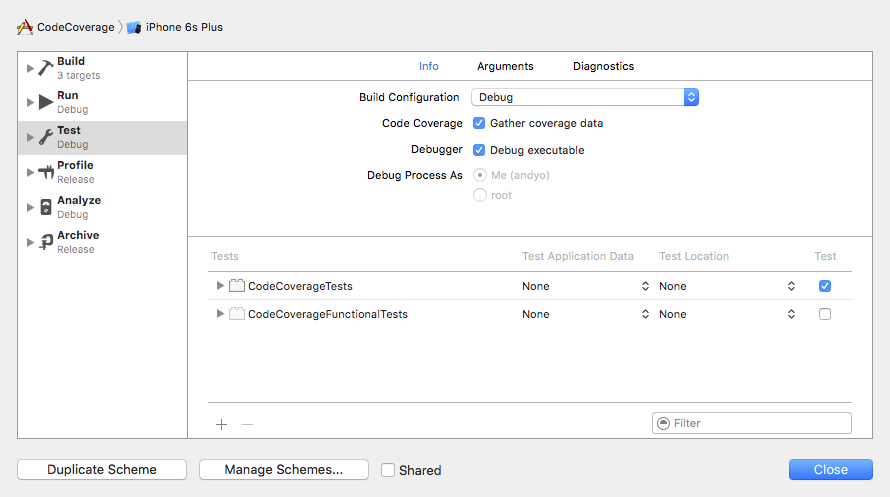

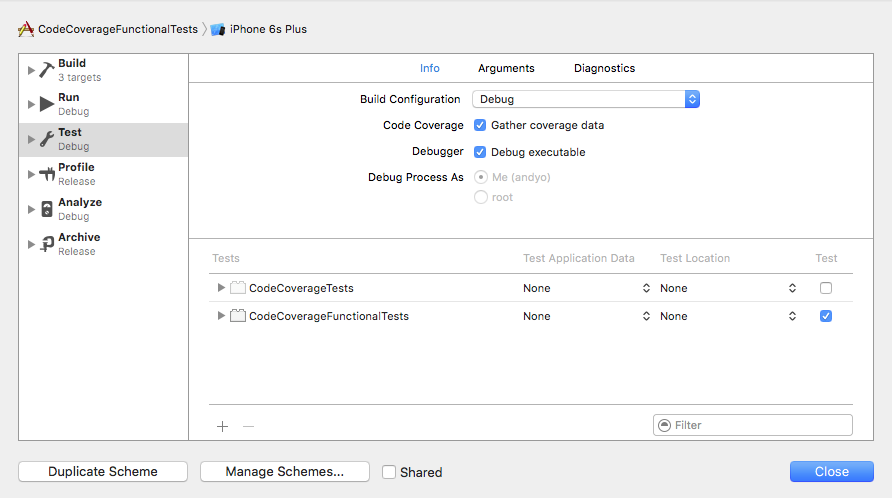

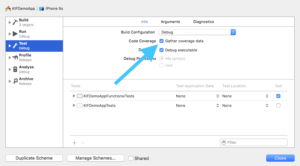

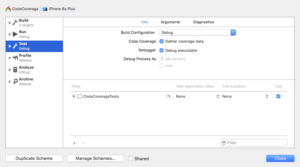

Now, turn on Code Coverage. Open the Scheme Editor and check Gather coverage data.

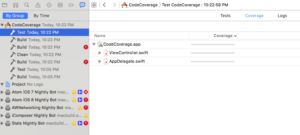

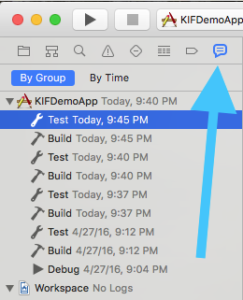

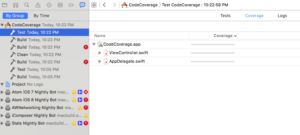

Finally, run the tests with Command-U (you only get a keyboard shortcut today :). Then open the Code Coverage results from the Report Navigator.

Uhhh, what’s wrong with that picture? It should be obvious: code coverage is being shown for ViewController and AppDelegate despite there being absolutely no legitimate tests in the project.

Why There Is Broken Code Coverage in Xcode

Well, I wouldn’t blame it all on Xcode. Xcode is measuring the code that executes while your tests execute. And technically, since your app is starting up and showing the first view controller, that code has executed, so it’s reported as covered. The thing is, by the definition of how you want to measure code coverage, that code isn’t actually “covered” because no test exercised it. There’s a really easy way to correct this.
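To make the inflation concrete, here is a toy model of line coverage in Swift. It is only a sketch of the idea, not how Xcode computes coverage internally; the `FileCoverage` type and all of the line numbers are made up for illustration.

```swift
// Toy model (hypothetical, not Xcode's implementation): a line counts as
// covered if it executed at all during the test run, no matter what ran it.
struct FileCoverage {
    let executableLines: Set<Int>
    var executedLines: Set<Int> = []

    // Percentage of executable lines that executed at least once.
    var percent: Double {
        guard !executableLines.isEmpty else { return 0 }
        let covered = executedLines.intersection(executableLines).count
        return Double(covered) / Double(executableLines.count) * 100
    }
}

// A pretend AppDelegate with 10 executable lines (made-up number).
var appDelegate = FileCoverage(executableLines: Set(1...10))

// Launching the app runs lines 1 through 5, even though no test touched them.
appDelegate.executedLines.formUnion(1...5)

print(appDelegate.percent) // 50.0
```

No test ran in this model, yet the file reports 50% coverage purely because the launch sequence executed half of its lines.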

How To Fix Broken Code Coverage in Xcode

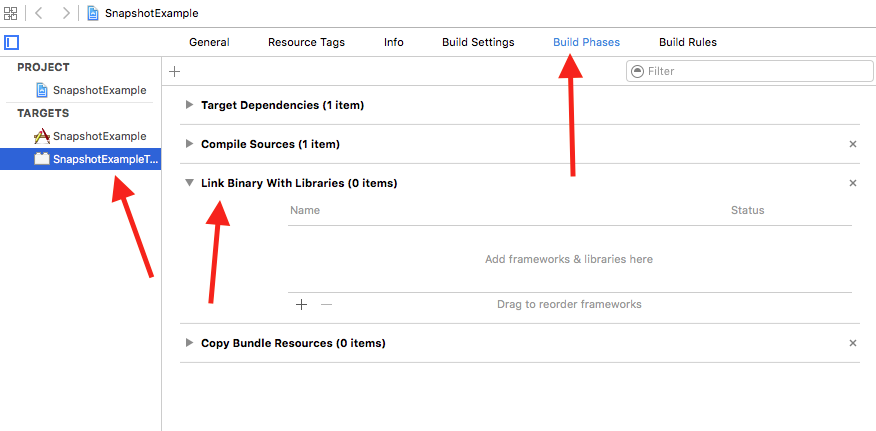

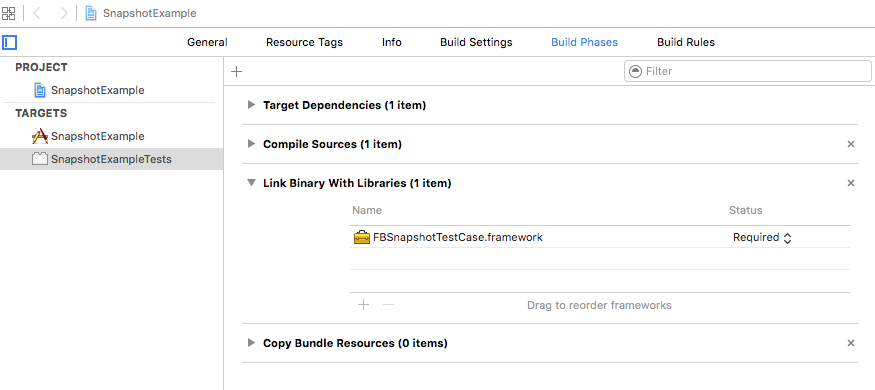

Jon Reid’s article on How to Switch Your App Delegate for Fast Tests inspired me to figure out how to fix this. You are going to create a separate app delegate that is used by your tests. This app delegate will be entirely empty, so it totally intercepts the app launch sequence. This way, no code in your real app delegate will be executed unless explicitly done so from a test, and ditto for any view controller that it would have otherwise instantiated.

Note: I want to give full attribution to Jon Reid on this code. I just figured out that it also fixes broken code coverage in Xcode.

To fix this, first open AppDelegate.swift and delete this line:

@UIApplicationMain

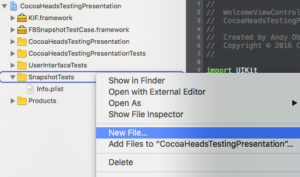

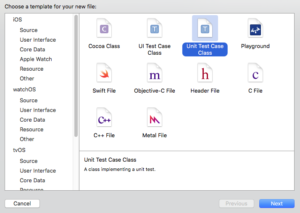

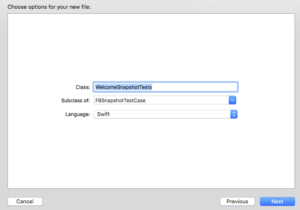

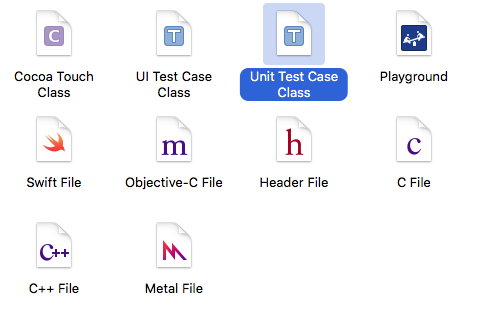

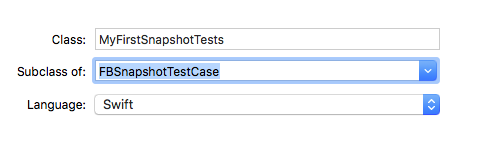

Create a new Swift file named TestingAppDelegate.swift, and replace its contents with:

import UIKit

class TestingAppDelegate: UIResponder {

}

This is the meat of the fix. It’s an empty implementation of an app delegate that will be used rather than your “real” app delegate.

Create a new Swift file named main.swift, and replace its contents with:

import UIKit

let isRunningTests = NSClassFromString("XCTestCase") != nil

let appDelegateClass: AnyClass = isRunningTests ? TestingAppDelegate.self : AppDelegate.self

UIApplicationMain(Process.argc, Process.unsafeArgv, nil, NSStringFromClass(appDelegateClass))

This is the first code that executes on app launch. It first checks whether XCTestCase is an available class to determine whether the app is being launched from tests or not. Depending on the result, a decision is made as to which app delegate should be used – the real one, or the empty one.
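If you are on a newer toolchain, note that `Process` was renamed to `CommandLine` in Swift 3, and recent Xcode templates mark the app delegate with `@main` instead of `@UIApplicationMain` (in which case that is the attribute to delete). Below is a rough sketch of what main.swift might look like under those assumptions. The `isRunningTests(classLookup:)` function and its injectable lookup parameter are my own additions so the check can be exercised in isolation; `TestingAppDelegate` and `AppDelegate` are assumed to be defined elsewhere in the app target, as described above.

```swift
import Foundation
#if canImport(UIKit)
import UIKit
#endif

// Hypothetical helper (not in the original code): reports whether the
// XCTestCase class can be found, which suggests the test bundle is loaded.
// The lookup is injectable so the decision can be checked without XCTest.
func isRunningTests(classLookup: (String) -> AnyClass? = NSClassFromString) -> Bool {
    return classLookup("XCTestCase") != nil
}

#if canImport(UIKit)
// Assumes Swift 5 or later, where CommandLine.unsafeArgv can be passed
// to UIApplicationMain directly.
let appDelegateClass: AnyClass = isRunningTests() ? TestingAppDelegate.self : AppDelegate.self
UIApplicationMain(CommandLine.argc, CommandLine.unsafeArgv, nil, NSStringFromClass(appDelegateClass))
#endif
```

The `#if canImport(UIKit)` guards only exist so the helper can be compiled and checked on its own; inside an iOS app target they are always true and could be dropped.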

That’s it. Now re-run your tests and open your coverage report.

Note: You may need to Clean for a successful build.

Woohoo! 0% coverage. Ya, that’s the only time you’ll ever be happy about 0% coverage, but in our case, we have no legitimate tests, so it’s what we want! Yay, we fixed our code coverage.

Side Benefit: Faster Tests

A side benefit of this fix for correcting broken code coverage in Xcode is that your tests will run faster. By relieving the simulator of bootstrapping a significant portion of app startup, you’ll save that time on each test run. I just compared the before and after states of this fix on one of my current projects, where we have about 500 unit tests. Before the fix, the tests ran in 21 seconds. After the fix, the tests ran in 19 seconds. That’s roughly a 10% reduction in run time (2 of 21 seconds). Multiply 2 seconds by the large number of times that the tests will be run, and that’s a lot of time.

Looking Forward

I added the final project to GitHub at https://github.com/obuseme/CodeCoverage. I hope that you find use in this approach. Just remember, you want 100% confidence in your code metrics. For me, if I notice something wrong with one of my code metrics, I stop using it until I get to the bottom of the false data.

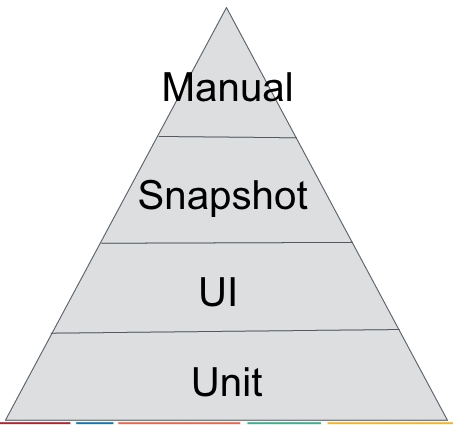

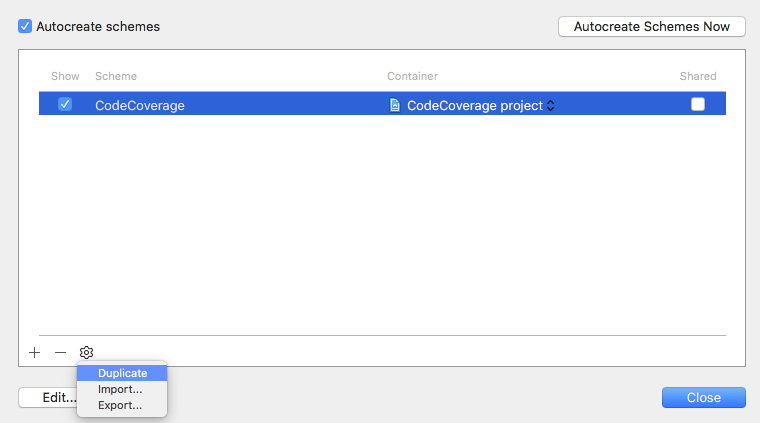

Tomorrow, I want to show you how you can gather separate code coverage metrics for your different types of tests. Hint, it involves some crafty Scheme creation.

Happy cleaning.